Game Postmortems

Reviewed by Greg Wilson / 2016-04-26

Keywords: Project Management

Washburn2016 Michael Washburn, Pavithra Sathiyanarayanan, Meiyappan Nagappan, Thomas Zimmermann, and Christian Bird: "What went right and what went wrong". Proceedings of the 38th International Conference on Software Engineering Companion, 10.1145/2889160.2889253.

In game development, software teams often conduct postmortems to reflect on what went well and what went wrong in a project. The postmortems are shared publicly on gaming sites or at developer conferences. In this paper, we present an analysis of 155 postmortems published on the gaming site Gamasutra.com. We identify characteristics of game development, link the characteristics to positive and negative experiences in the postmortems and distill a set of best practices and pitfalls for game development.

This paper is a great example of what can be learned from careful application of qualitative methods. The authors' first step was to categorize statements in the postmortem narratives written by developers. That necessarily required human judgment, but when done carefully, such categorization can be as reproducible as any other kind of scientific work:

Initially, we started with 12 categories of common aspects of development. These categories were based on the categories Ara Shirinian identified in his analysis of postmortem reviews.

In order to identify additional categories, we performed 3 iterations of analysis and identification. The first week, we each read and analyzed 3 postmortem reviews each, classifying the items discussed in each section into the 12 predetermined categories of common aspects that impact development. While analyzing these reviews, we identified additional categories of items that went right or wrong during development, and revisited the reviews we had already analyzed to update the categorization of items. For the next two weeks we repeated this process of analyzing postmortems and identifying categories, analyzing 10 postmortems each in week 2, and 15 postmortems each in week 3. After each iteration, we discussed the additional categories we identified, and determined if they were viable.

After our initial iterations for identifying additional categories, we had completed the analysis of 60 postmortem reviews. We then stopped identifying new categories, and began analyzing postmortems at a combined rate of about 40 postmortem reviews per week. After each week we reviewed what we had done to ensure we both had the same understanding of each category. This continued until we had analyzed all the postmortem reviews.
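The weekly check that both coders "had the same understanding of each category" can be made concrete with an agreement statistic. The excerpt above does not say whether the authors computed one, so the following is only a minimal sketch of one common choice, Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The function name, the category names, and the labels are all hypothetical and are not taken from the paper.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two coders who labelled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(labels_a)

    # Fraction of items on which the two coders chose the same category.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n

    # Agreement expected by chance, from each coder's category frequencies.
    counts_a = Counter(labels_a)
    counts_b = Counter(labels_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)

    if expected == 1.0:
        # Both coders used a single identical category for every item.
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical labels for six postmortem statements (not data from the paper).
coder_a = ["tools", "schedule", "tools", "testing", "schedule", "tools"]
coder_b = ["tools", "schedule", "testing", "testing", "schedule", "tools"]
kappa = cohens_kappa(coder_a, coder_b)
print(f"kappa = {kappa:.2f}")
```

A value near 1 suggests the coders are applying the categories the same way, while a value near 0 suggests their agreement is no better than chance and the category definitions need another round of discussion.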

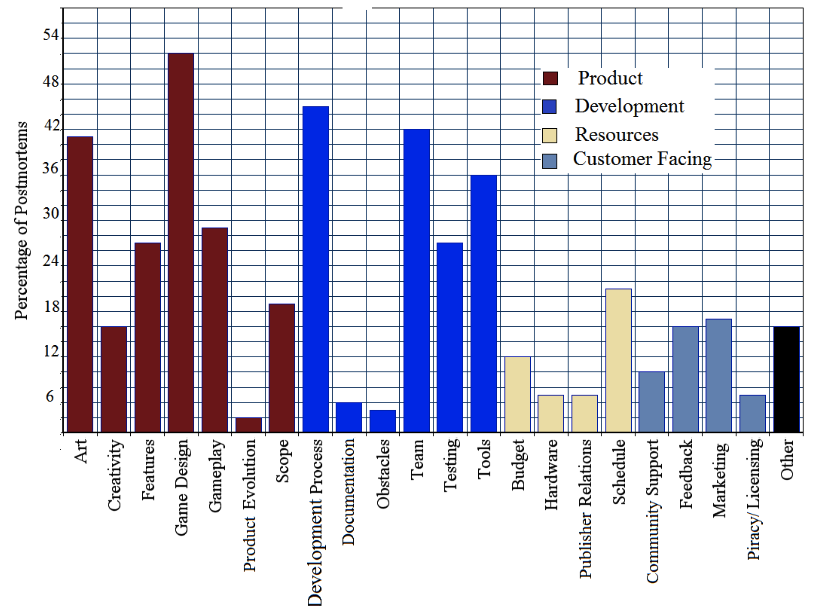

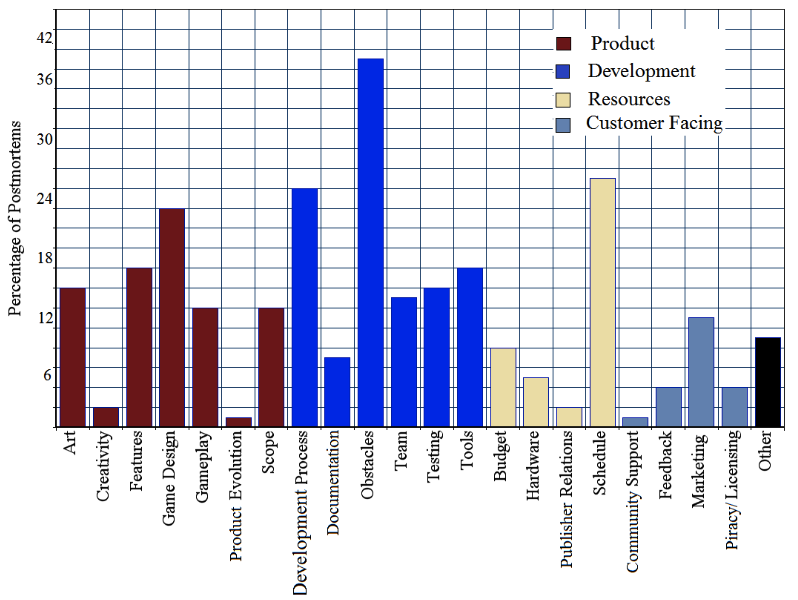

Here are their main findings (with apologies for posting screenshots from a PDF):

What Went Right

What Went Wrong

This isn't the whole story, though: the authors also discuss differences between small and large teams, between developers who used publishers and those who published on their own, and between single-platform and multi-platform games. It would be great to see this paper as required reading in game design classes; it would be even greater if students in those classes were required to do this kind of analysis themselves as an assignment so that they would be more likely to understand it, appreciate it, and act on it.