Test Flakiness Across Programming Languages

Reviewed by Greg Wilson / 2023-03-14

Keywords: Programming Languages, Testing

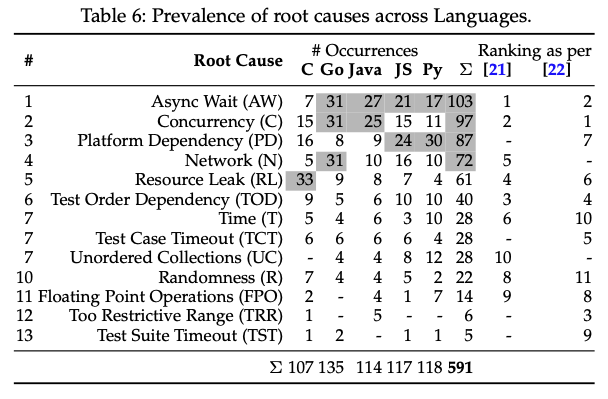

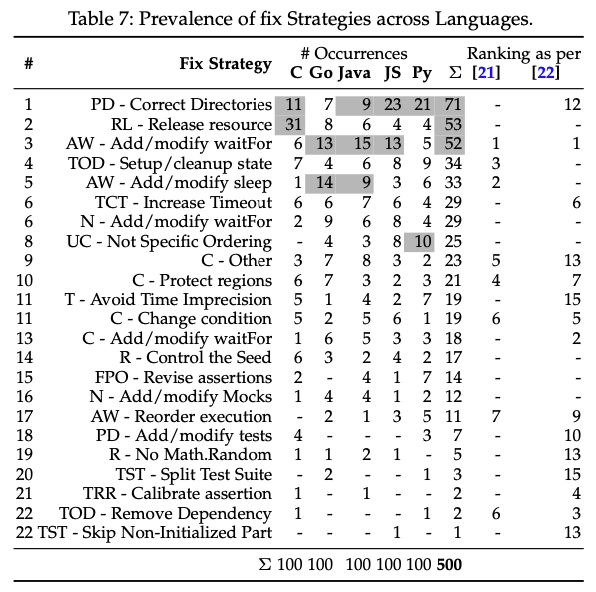

How are programming languages similar, and how do they differ? Most arguments about this are based on lists of language features, but another way to tackle the question is to ask how different languages behave in practice. This paper does that by looking at how flaky tests in C, Go, Java, JavaScript, and Python are similar and how they differ. The authors find that things like concurrency are hard everywhere, but other things (like resource management) vary from language to language. They also find that fewer than a dozen strategies for fixing flaky tests account for 85% of fixes, which suggests that explicitly teaching people bug-fixing and refactoring patterns for tests would be beneficial. Finally, they find that people either fix flaky tests right away or leave them broken for a long time, suggesting either that some tests are useful and some are not, or that some projects have a culture of clean coding and others don't, with little middle ground.

Keila Costa, Ronivaldo Ferreira, Gustavo Pinto, Marcelo d'Amorim, and Breno Miranda. Test flakiness across programming languages. IEEE Transactions on Software Engineering, pages 1–14, 2022. doi:10.1109/tse.2022.3208864.

Regression Testing (RT) is a quality-assurance practice commonly adopted in the software industry to check if functionality remains intact after code changes. Test flakiness is a serious problem for RT. A test is said to be flaky when it non-deterministically passes or fails on a fixed environment. Prior work studied test flakiness primarily on Java programs. It is unclear, however, how problematic is test flakiness for software written in other programming languages. This paper reports on a study focusing on three central aspects of test flakiness: concentration, similarity, and cost. Considering concentration, our results show that, for any given programming language that we studied (C, Go, Java, JS, and Python), most issues could be explained by a small fraction of root causes (5/13 root causes cover 78.07% of the issues) and could be fixed by a relatively small fraction of fix strategies (10/23 fix strategies cover 85.20% of the issues). Considering similarity, although there were commonalities in root causes and fixes across languages (e.g., concurrency and async wait are common causes of flakiness in most languages), we also found important differences (e.g., flakiness due to improper release of resources are more common in C), suggesting that there is opportunity to fine tuning analysis tools. Considering cost, we found that issues related to flaky tests are resolved either very early once they are posted (<10 days), suggesting relevance, or very late (>100 days), suggesting irrelevance.
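
To make the "async wait" root cause mentioned above concrete, here is a minimal Python sketch. It is not taken from the paper: `BackgroundJob`, `flaky_check`, and `stable_check` are hypothetical names invented for illustration, and the fix shown (waiting on an explicit completion signal instead of sleeping for a guessed duration) is just one commonly used pattern, not necessarily one of the fix strategies the authors catalogue.

```python
import threading
import time


class BackgroundJob:
    """Hypothetical worker that produces a result after some delay."""

    def __init__(self, delay):
        self.delay = delay
        self.result = None
        self.done = threading.Event()  # set once the result is available

    def start(self):
        threading.Thread(target=self._run, daemon=True).start()

    def _run(self):
        time.sleep(self.delay)  # stands in for real asynchronous work
        self.result = 42
        self.done.set()


def flaky_check(job):
    # Anti-pattern: guess how long the work takes. Whether this passes
    # depends on scheduling and on how job.delay compares to the sleep.
    job.start()
    time.sleep(0.01)
    return job.result == 42


def stable_check(job, timeout=5.0):
    # One possible fix: block on the completion signal, with a timeout
    # so that a genuinely broken job still fails instead of hanging.
    job.start()
    finished = job.done.wait(timeout)
    return finished and job.result == 42
```

With a job whose delay is longer than the guessed sleep, `flaky_check` can fail even though the code under test is correct, while `stable_check` only fails if the job does not finish within the timeout.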